Sunday Recap

Published:

Soft memory limit exceeded

Recently I starting working with QM/MM Umbrella Sampling calculations. I use Amber for the Molecular Mechanics part and Terachem for the electronic structure calculations. The communication between the two softwares is not a trivial issue but has been solved sufficiently with the terachem-protocol-buffer (TCPB). Also, my job scheduler is Slurm. The amount of files produced during these runs and the memory used is massive. 20 umbrella windows alone produced ~15,000,000 files and 2.6Tb of memory which means that I started recieving the notorious “exceeded memory limits” emails from the IT department. Naturally, the question rises: How do I rigorously monitor the jobs running, their memory usage and the number of files generated?

To understand how much memory these files occupied and also to measure their number, I wrote this bash scirpt (also added timing because the du -sh command is pretty slow):

#!/bin/bash

MeasureMemory() {

read -p "Enter index1:" index1

read -p "Enter index2:" index2

read -p "Enter step:" step

total=0

for (( i = index1; i <= index2; i+= step )); do

start=$(date +%s)

output=$(du -sh "d_${i}_0_")

echo "$output"

num=$(echo "$output" | awk '{ if (match($0, /[0-9]+/)) print substr($0, RSTART, RLENGTH) }')

total=$((total + num))

end=$(date +%s)

elapsed=$(echo "$end - $start" | bc)

echo "Iteration ${i} took ${elapsed} seconds to finish!"

done

echo "Total memory is: ${total}G"

}

NumberOfFiles() {

read -p "Enter index1:" index1

read -p "Enter index2:" index2

read -p "Enter step:" step

total=0

for (( i = index1; i <= index2; i+= step )); do

count=$(find "d_${i}_0_" -type f | wc -l)

total=$((total + count))

echo "Directory d_${i}_0 contains ${count} files."

done

echo "Total number of files is: ${total}"

}

MeasureMemory

NumberOfFiles

Very cool things I learned writing these script were:

- Bash performs brace expansion before any variable substitution so you need to use C-style loops when you have variables.

- The command date +%s outputs the current time as a Unix timestamp, which is the number of seconds that have elapsed since January 1, 1970 (known as the Unix epoch).

- You can use awk’s regular expression matching to extract the integer part from a string. Other (easier) ways exist.

- Not in the script but I used this very cool Linux command sort.

This script allowed me to gauge how much memory this run will take on average and I concluded that I had enough memory before hitting the hard limit. From now on, I would like to make these more rigorous by sending a test run for ~20 steps. Then, I will extrapolate the memory it will take to complete and will compare with the total number of available space I have in the work directory. To be added to the imaginary to-do list that I never have time to actually do-do.

Lastly, there is a nice little manual provided by the HPC I use at UNC@ChapelHill that briefly explains all the ways you can monitor your jobs running on GPUS.

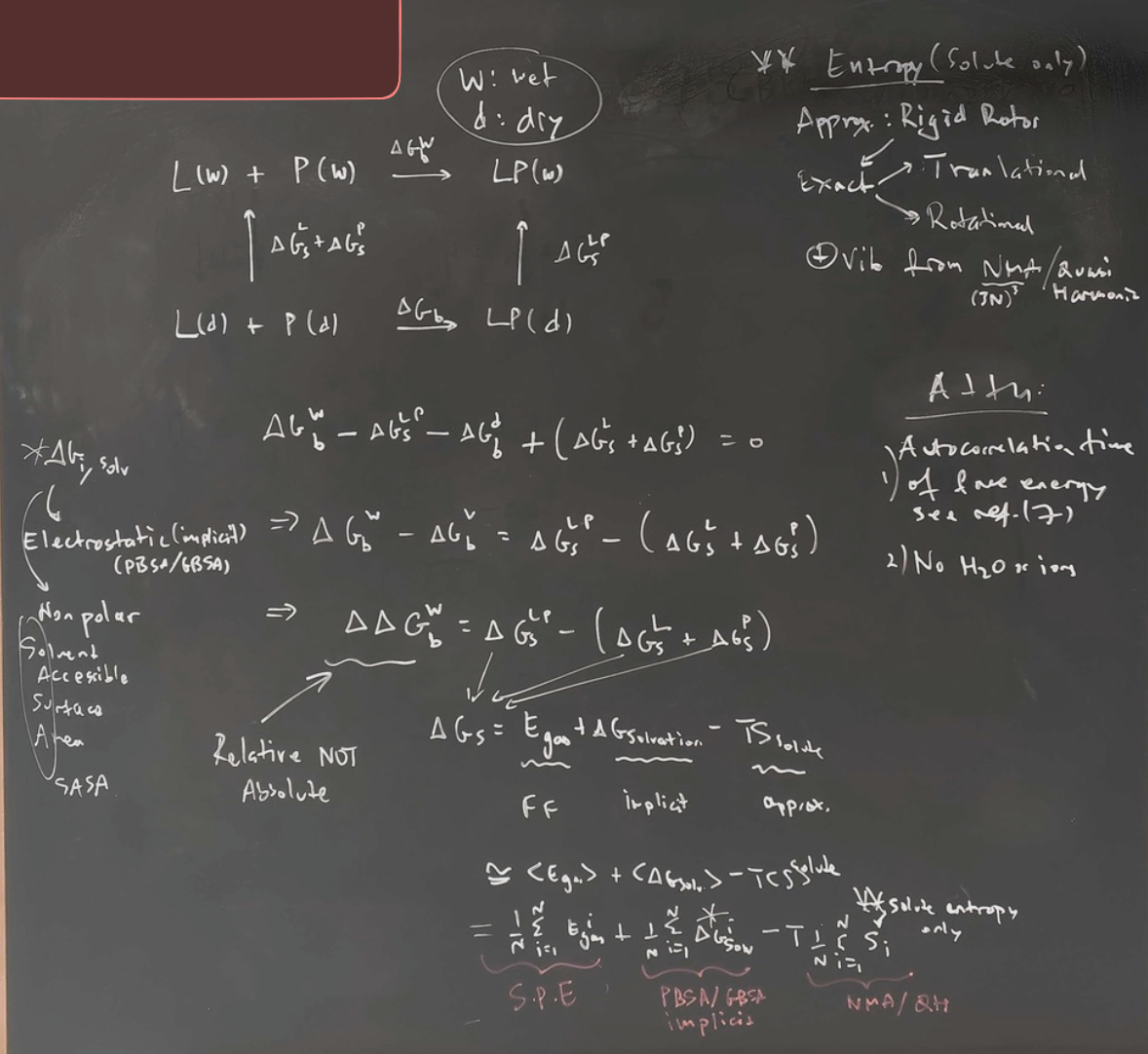

End-state free energy methods

While not the most accurate (limitations articulated very nicely in this paper) end-state methods are fast and can give reasonable answers for large systems. I read and summarized the original paper of the MMPBSA method in this diagram drawn in my office’s blackboard: